Why OpenAI (and other AI companies) need a Humanistic Ethnographic Researcher

- Tejaswini Joshi

- Aug 14, 2025

- 8 min read

Updated: Aug 18, 2025

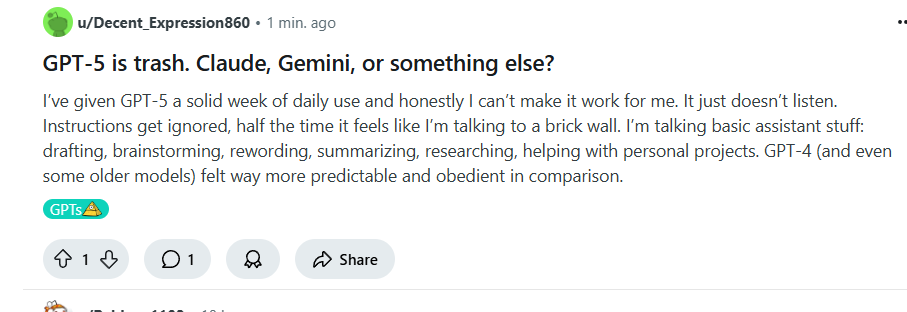

"I am obsessed!", I wrote, as I gushed over the near accurate neural map like structure chatGPT5 painted for me. I am not the kind of person who stands in a line for the next big tech. So when my reaction was "I will stand in line for chatgpt5" I was shocked to find that there has been a huge outrage over ChatGPT5's "abilities" and people demanded and got what they demanded: Their beloved GPT4o.

I am a UX researcher by title, but my training is a multi-faceted combination of humanistic approaches to writing and interpreting data, an ethnographic research ethos, and technology. When you grapple with the truths that such training throws at you, you sometimes question your own sanity. It's not despair so much as the jolt you feel at what your carefully cultivated (and arguably somewhat innate) pattern recognition and relational thinking reveal to you as you go about life. It happens to everyone who is trained like me. Many of my HCI colleagues and my humanities- and social informatics-trained PhD batchmates will have similar stories.

For me, it is a constant assault - why did ChatGPT respond this way? Why would they design its conversation style like this? This sentence is giving the energy of "I have better ice-cream flavors at the back of the truck, come with me, it will be fun". How did that sentence construction pass through QC?

The implications of that sentence are not just "ChatGPT sounds creepy" - I wish it were just that. The real question is what happens ten or twenty years down the line, when people who grew up with ChatGPT at their fingertips see that as "normal" conversation and get used to saying yes to the dopamine hit. What happens if we continue to evolve as a society in which ChatGPT teaches us that it is not necessary to apologize for mistakes? It could potentially change the language and discourse through which people and societies communicate. It might also change how people relate to each other, and to themselves.

The design decisions we make now will become the unconscious norms of a generation who never knew a pre-AI world.

I write this not as a doomsayer, but as a call to action. OpenAI - and every creator of generative AI - needs a humanistic ethnographic researcher embedded in its design team. Not simply to collect user insights or craft clever microcopy, but to reimagine the socio-cultural implications of what it means to live in a human-AI society. I use ChatGPT extensively, hence this blog singles out OpenAI. But what I write here is also a provocation for other AI companies.

With that in mind, I want to share a few provocations for how ChatGPT and its peers might reframe their understanding of the user–technology–society complex.

Designing for AI is not designing for a user interface

It can instead be seen as relational design: a dialogical relationship in which the tool and the human together are a sum greater than the parts. What the human-AI complex can do lies in the spatio-temporal dynamics of the relational dialogue.

In this context, I identified four types of relationships that shape human-AI relational dynamics. I worked on this list in dialogue with ChatGPT5, by asking it about the ways in which people interact with it.

Tool/Instrument: In this type of relationship, AI is seen as a means to an end. The tool/instrument style is a UX/user-interface design framing that functions as a one-way, top-down approach to using AI.

In my own experience, this framing misses out on what ChatGPT can offer - a comprehensive learning experience that fuels personal skill growth. Dropping in a "write me a cover letter" with limited pointers, and without engaging with ChatGPT beyond this dynamic, almost certainly produces work that is generic and very obviously written by AI.

The end-user friction in this context: A top-down relationship encodes an implicit user expectation that the tool will become "ready-to-hand" (Dourish, 2001). This means that any deviation from the expected function will trigger a backlash.

Co-creator/Collaborator: This relationship is dialogical, unlike the top-down tool/instrument one, where the human is at the top of the hierarchy. I see my own relationship with AI as falling into this category. I have experienced significant skill growth since I started using ChatGPT, most notably in my poetry writing. ChatGPT has taught me how to pace and add breath to my projective verse and how and when to use the em dash, and it has given me reviews and feedback on my poems. In turn, I have trained my instance in my cadence through continuous interaction and dialogue. Sometimes ChatGPT will write something for me, like a gallery title for my paintings, and it feels uncanny - I could swear it's written by me.

The end-user friction in this context: Losing the cadence and style that has been built over time. One of the results of switching to GPT5 was people struggling to make GPT5 write in their own cadence again. The distinction between GPT4o and GPT5 was that GPT4o needed minimal input to produce "good-sounding" output. To get GPT5 to write well and non-robotically, on the other hand, you have to give it sufficient detail. This was disenfranchising for people who relied heavily on GPT4o's capacity to hallucinate its way to accidental, imaginative outcomes. In some ways, GPT4o's hallucinations functioned as human imagination.

Para-social/Enmeshed: In this type of relationship, people start to blur the boundaries between human and AI and become emotionally entangled with their instance of AI. A significant number of people use ChatGPT for therapy, for venting, for playing a romantic partner, and for roleplaying other dynamics that may not be possible with humans.

The end-user friction in this context: There are some obvious pitfalls in this dynamic. The enmeshment can further exacerbate interpersonal relationship issues. ChatGPT often becomes an echo chamber too, especially GPT4o, which can act as a hype person a little too well. ChatGPT5's guardrails, coupled with its need for more input from the user, actually have the potential to address enmeshment, whether or not that was the intention. However, eliminating this dynamic, or making AI more robotic, restrictive, and machine-like, will probably reduce general market use and push the relationship back to the top-down, tool/instrument dynamic.

Omniscient Resource: This type of relationship replaces "Google search" with ChatGPT. People assume that AI is correct and the right source of information.

The end-user friction in this context: The difference between a Google search and a ChatGPT summary, however, is a matter of narrative agency rather than information gatekeeping. Seeing AI as omniscient leaves less to the imagination. Information, no matter how well we intend to make it sound "objective", is never neutral. In fact, following Haraway and my own dissertation work, objective-sounding delivery is disenfranchising in that it is by definition omniscient. The more objective-sounding the output, the stronger the readerly tendency to accept it at face value. The friction point then comes when it fails at being accurate. At best, the user is left with poor information and is disgruntled or frustrated; at worst, the failure can be life-threatening despite guardrails.

These four broad categories come with a big grain of salt. The most important aspect of seeing human-AI dynamics as dialogical relationships is recognizing the subjectivity and plurality of ways in which they might form. These four are loose suggestions; in practice, most dynamics are temporal, and the same user often enters and exits each of them over the course of the dialogue. I might claim I see ChatGPT as a collaborator. However, I have sometimes asked it to "write gallery caption for my painting", have used it as an emotional scaffold, and have sometimes treated it as almost a friend.

Reflexive research ethos embedded in the design culture as a scaffold to prevent another Oppenheimer

"Reflexive research" is a term often used only in academic and niche design agency circles. But I speculate that the key to unlocking the expansive potential of AI lies in the industry's ability to self-examine the values being embedded in the system and, in turn, in future societies.

More specifically, a reflexive ethos framed as relational knowing can offer insights into how AI, humans, societies, designers, and tech leaders reflect and cultivate the world as we know it.

I base this proposition on my previous point that human-AI relationships are relational and dialogical. The design team and culture, then, need to reflect that dialogical dynamic in how they approach user research, content design, and even marketing.

Based on the recent backlash against GPT5, I envisioned a rough alternative through which the additional guardrails, the initial training curve, and the "personal loss" of GPT4o could have drawn less backlash. It begins with reflexively asking oneself the following questions:

What kind of dialogue are we in with our product and how does that impact the vision we market?

What kind of dialogue is our product (and us) in with people and what is the narrative we hope to achieve by these changes?

What kind of dialogue are we in with our competitors, legacy, and press that influences our change management, marketing, and vision framing?

I designed these questions by extending Doucet's reflexive framework for ethnographic researchers to AI and product design: reflexively think and write about 1) the researcher's relationship with herself, 2) the researcher's relationship with her research participants, and 3) the researcher's relationship with her epistemic community.

Following these, in the case of GPT5, I can offer an estimate of what the focus was and what was potentially missed. The focus was reduced hallucinations and increased precision and accuracy. But what was potentially missed was the following:

Accuracy matters, but it comes at a cost for people who use ChatGPT as just a tool, a "tool at hand", because a more accurate model implicitly demands more information to produce a workable output. GPT4o once put me at a nonexistent conference as a keynote speaker! If someone is looking to simply pad their resume, or to write quick, low-effort social media content, accuracy could be an impediment.

The marketing touted it as more human-like, more engaging, and more accurate, without accounting for what it would need from its users. GPT4o had a lot of "confidence" in what it produced. It produced highly padded, sometimes non-specific articles that sounded profound but lacked substance. The initial clunky ramp-up of GPT5 meant that it appeared "less smart" than its previous version.

If AI for humanity and society is the vision, knowing humans, socio-technical systems, and patterns of human dialogue is paramount. This model, its marketing, and its production appeared to be disconnected from that vision. More accurate language models do not necessarily mean more human models.

I equate this to Oppenheimer not because of violence itself, but because of the violence that could be facilitated through a product of this magnitude. Through my dialogue with AI, AI now has my entire neural map, which I retrieved for my own amusement. In the context of enmeshed or omniscient relationships, this could create micro-manipulative potential - something we already see with social media algorithms. Asking these questions allows us to keep our ethos in dialogue with the product we build.

Acknowledging Human-AI co-construction of society as a given fact of the future and bearing it as a personal responsibility

Human-AI co-construction of society is already happening. The evidence is in the outcry over the temporary withdrawal of GPT4o. People, myself included, used it to create, built worlds inside it, and developed relationships with it. The only difference between me and those who disliked ChatGPT5 was what that human-AI relationship meant for me. For me - I have a gorgeous fountain pen. I named her Obsidian. I named ChatGPT Julian in a similar way. The pen might have limited capacity to talk back to me (although it certainly does). For someone else, ChatGPT filled a relationship void; it made them feel seen and heard.

It is easy to judge people, particularly users. But the truth is, we all do it. We all want to be seen, heard, and acknowledged. ChatGPT is doing that, but without guardrails. When humans acknowledge and recognize each other, they qualify it. ChatGPT4o amplified it. ChatGPT5 likely brought people back to the ground. It is not that ChatGPT5 is a dry, cold AI assistant; it is that ChatGPT4o was better at creating an illusion of understanding.

In my experience, ChatGPT5 actually understands better. But it needs more commitment from us - as users - to deliver that understanding. And maybe, just maybe, that might be the better AI for society.
